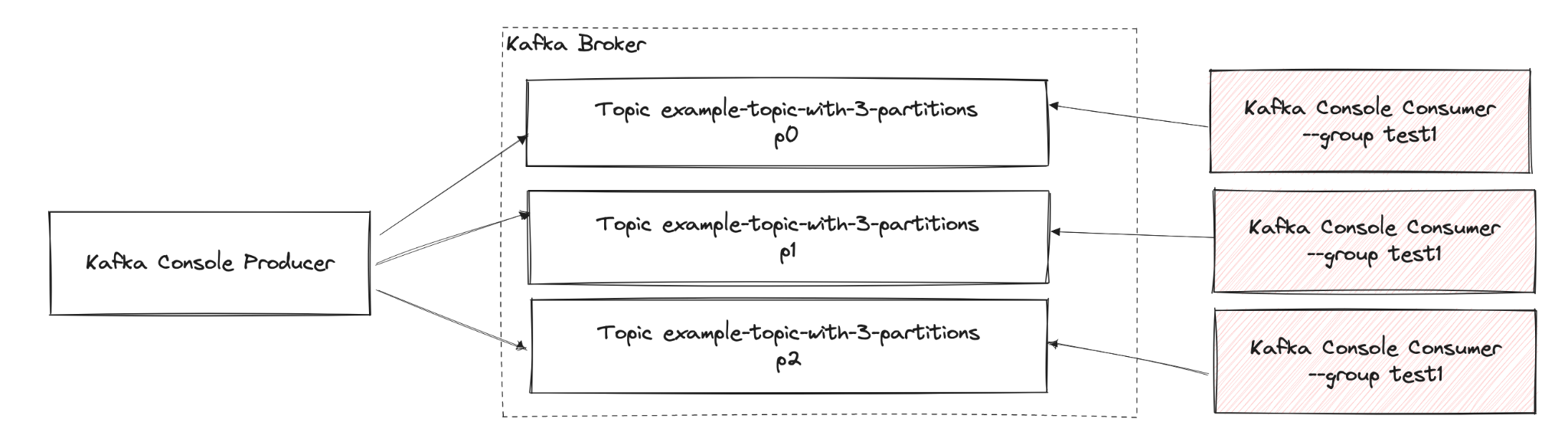

Multiple partitions

In this exercise, you will gain hands-on experience with Apache Kafka, a powerful distributed streaming platform, using its Command Line Interface (CLI). Through a series of practical tasks, you will create topics, publish and consume messages, and explore key Kafka concepts such as partitions and consumer groups. The Kafka CLI provides the essential tools for managing and interacting with a Kafka environment, letting you monitor and control the flow of messages. The goal of this session is to deepen your understanding of Kafka's architecture and of how it processes messages, so that you can apply Kafka in real-world applications.

Implementation

- Create a Topic with a larger number of partitions (e.g., 3)

./kafka-topics.sh --create --topic example-topic-with-3-partitions --bootstrap-server localhost:9092 --replication-factor 1 --partitions 3

- Check how many partitions the created Topic has

./kafka-topics.sh --describe --topic example-topic-with-3-partitions --bootstrap-server localhost:9092

- Expected result: the Topic should have 3 partitions (PartitionCount: 3).
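You can also check the partition count from a script instead of reading the output by eye. The Bash sketch below extracts the value with sed. The function name partition_count is hypothetical. The sketch assumes that the summary line of the describe output contains a field of the form PartitionCount: <n>, as in the expected result above.

```shell
#!/usr/bin/env bash
# Print the PartitionCount value from `kafka-topics.sh --describe` output read on stdin.
# Assumption: the topic summary line contains a field "PartitionCount: <n>".
partition_count() {
  sed -n 's/.*PartitionCount: *\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Usage (requires a running broker):
#   ./kafka-topics.sh --describe --topic example-topic-with-3-partitions \
#     --bootstrap-server localhost:9092 | partition_count
```

If the output contains no PartitionCount field, the function prints nothing. An empty result is therefore a hint that the topic name or broker address is wrong.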

- Run three Consumers working in one Consumer Group. Execute the command in three separate terminal windows:

./kafka-console-consumer.sh --topic example-topic-with-3-partitions --bootstrap-server localhost:9092 --group test1 --property print.key=true --property key.separator=" - "

- Check which Broker has been chosen as the Coordinator of the Consumer Group (see the COORDINATOR (ID) column):

./kafka-consumer-groups.sh --bootstrap-server localhost:9092 --group test1 --describe --state
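The coordinator's broker id can also be picked out of the --state output programmatically. The Bash sketch below does this with a single sed expression. The function name coordinator_id is hypothetical. The sketch assumes that the COORDINATOR (ID) column is printed as host:port (id), so compare the pattern against your own output before relying on it.

```shell
#!/usr/bin/env bash
# Print the broker id of the group coordinator from
# `kafka-consumer-groups.sh --describe --state` output read on stdin.
# Assumption: the COORDINATOR (ID) column has the form "host:port (id)".
coordinator_id() {
  # The header "COORDINATOR (ID)" has no "host:port", so only data rows match.
  sed -n 's/.*:[0-9][0-9]* (\([0-9][0-9]*\)).*/\1/p' | head -n 1
}

# Usage (requires a running broker):
#   ./kafka-consumer-groups.sh --bootstrap-server localhost:9092 \
#     --group test1 --describe --state | coordinator_id
```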

- Publish a few messages manually, specifying a key for each. Use the command below to publish several messages with the same key (the key and the value are separated by a colon):

./kafka-console-producer.sh --bootstrap-server localhost:9092 --topic example-topic-with-3-partitions --property "parse.key=true" --property "key.separator=:"
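Typing messages by hand works for a few records. To publish a repeatable batch with one key, you can instead generate the lines and pipe them into the same producer command. The Bash sketch below does this. keyed_messages is a hypothetical helper, and the key key1 and the values messageN are arbitrary examples. Each line puts ":" between key and value, which matches parse.key=true and key.separator=:.

```shell
#!/usr/bin/env bash
# keyed_messages KEY COUNT
# Hypothetical helper: print COUNT lines "KEY:messageN" that all share KEY,
# in the key:value form the console producer parses with key.separator=":".
keyed_messages() {
  local key="$1" count="$2" i
  for (( i = 1; i <= count; i++ )); do
    printf '%s:message%d\n' "$key" "$i"
  done
}

# To publish instead of printing (requires a running broker):
#   keyed_messages key1 3 | ./kafka-console-producer.sh --bootstrap-server localhost:9092 \
#     --topic example-topic-with-3-partitions --property "parse.key=true" --property "key.separator=:"
```

Every line carries the same key, so with the default partitioner all of these messages land in one partition. They are therefore read by a single consumer of the group.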

- Check how the sent messages are consumed by consumers in the group

- Examine how the partitions are assigned to the consumers, paying attention to the CONSUMER-ID column:

./kafka-consumer-groups.sh --describe --group test1 --bootstrap-server localhost:9092

- Stop one of the three running Consumers (for example, with Ctrl+C in its terminal window) and check the partition assignment again:

./kafka-consumer-groups.sh --describe --group test1 --bootstrap-server localhost:9092
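With three partitions, each of the three consumers should own one partition. After one consumer is stopped and the group rebalances, one of the remaining two should own two partitions. The Bash sketch below tallies the rows per CONSUMER-ID so you can count this instead of reading the table. The function name partitions_per_consumer is hypothetical. The sketch assumes the default column order GROUP, TOPIC, PARTITION, CURRENT-OFFSET, LOG-END-OFFSET, LAG, CONSUMER-ID, HOST, CLIENT-ID.

```shell
#!/usr/bin/env bash
# Count how many partitions each CONSUMER-ID owns, reading
# `kafka-consumer-groups.sh --describe --group <group>` output on stdin.
# Assumption: CONSUMER-ID is the 7th whitespace-separated column.
# Partitions with no active consumer are counted under "-".
partitions_per_consumer() {
  awk '$1 != "GROUP" && NF >= 7 { owned[$7]++ }
       END { for (c in owned) print c, owned[c] }' | sort
}

# Usage (requires a running broker):
#   ./kafka-consumer-groups.sh --describe --group test1 \
#     --bootstrap-server localhost:9092 | partitions_per_consumer
```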

- Resend the messages with the same keys as before and check which Consumer is currently processing the messages

- Read the messages from each of the partitions 0, 1 and 2, specifying the partition manually:

./kafka-console-consumer.sh --topic example-topic-with-3-partitions --bootstrap-server localhost:9092 --from-beginning --property print.key=true --property key.separator="-" --partition 0

./kafka-console-consumer.sh --topic example-topic-with-3-partitions --bootstrap-server localhost:9092 --from-beginning --property print.key=true --property key.separator="-" --partition 1

./kafka-console-consumer.sh --topic example-topic-with-3-partitions --bootstrap-server localhost:9092 --from-beginning --property print.key=true --property key.separator="-" --partition 2

- Analyze how the messages have been distributed across partitions
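For records with a key, Kafka's default partitioning computes the partition as toPositive(murmur2(key bytes)) % number of partitions. This is why messages with the same key always end up in the same partition. The Bash sketch below re-implements that murmur2 variant, so you can predict the partition for a key and compare it with what the three per-partition consumers show.

This is an illustration, not part of the Kafka CLI, and murmur2 and partition_for_key are hypothetical helper names. The result should only match Kafka if two conditions hold:

- The producer uses the default partitioner, with no custom partitioner.class.
- The key is sent as its UTF-8 bytes, as the console producer does.

```shell
#!/usr/bin/env bash
# Illustrative re-implementation of the hash used by Kafka's default
# partitioner for records that have a key:
#   partition = toPositive(murmur2(keyBytes)) % numPartitions
# Values are kept as unsigned 32-bit numbers inside Bash's 64-bit arithmetic.

# murmur2 KEY -> prints the 32-bit murmur2 hash (unsigned) of KEY's bytes
murmur2() {
  local -a b
  # One decimal number per byte of the key (od may split them over lines).
  b=($(printf '%s' "$1" | od -An -tu1 -v))
  local len=${#b[@]} mask=$((0xFFFFFFFF)) m=$((0x5bd1e995))
  local h=$(( (0x9747b28c ^ len) & mask ))
  local i k
  # Mix the key four bytes (little-endian) at a time.
  for (( i = 0; i + 3 < len; i += 4 )); do
    k=$(( b[i] | (b[i+1] << 8) | (b[i+2] << 16) | (b[i+3] << 24) ))
    k=$(( (k * m) & mask ))
    k=$(( k ^ (k >> 24) ))
    k=$(( (k * m) & mask ))
    h=$(( ((h * m) & mask) ^ k ))
  done
  # Fold in the remaining 1-3 bytes, like the tail handling in Kafka's Utils.murmur2.
  local t=$(( len & ~3 )) rem=$(( len % 4 ))
  if (( rem >= 3 )); then h=$(( h ^ (b[t+2] << 16) )); fi
  if (( rem >= 2 )); then h=$(( h ^ (b[t+1] << 8) )); fi
  if (( rem >= 1 )); then h=$(( ((h ^ b[t]) * m) & mask )); fi
  # Final avalanche.
  h=$(( h ^ (h >> 13) ))
  h=$(( (h * m) & mask ))
  h=$(( h ^ (h >> 15) ))
  echo "$h"
}

# partition_for_key KEY NUM_PARTITIONS -> prints the partition index
partition_for_key() {
  local hash
  hash=$(murmur2 "$1")
  # toPositive() clears the sign bit before taking the modulo.
  echo $(( (hash & 0x7FFFFFFF) % $2 ))
}
```

For example, partition_for_key abc 3 prints 0. Running partition_for_key with each key you published shows where those messages should be stored.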

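- Read the latest offset of each partition in the Topic (the log-end offset, i.e. the offset the next message will receive, which is one greater than the offset of the last stored message)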

./kafka-get-offsets.sh --bootstrap-server localhost:9092 --topic example-topic-with-3-partitions --time latest

- Read the earliest offset of each partition in the Topic (the log-start offset, i.e. the offset of the oldest message still retained)

./kafka-get-offsets.sh --bootstrap-server localhost:9092 --topic example-topic-with-3-partitions --time earliest
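Each line printed by kafka-get-offsets.sh has the form topic:partition:offset. Subtracting a partition's earliest offset from its latest offset gives the number of messages currently retained in it. This holds as long as the offsets are contiguous: a non-compacted topic without transactional markers, as in this exercise. The Bash sketch below does the subtraction for every partition. The function name messages_per_partition is hypothetical.

```shell
#!/usr/bin/env bash
# messages_per_partition LATEST_FILE EARLIEST_FILE
# Both files hold kafka-get-offsets.sh output ("topic:partition:offset" per line).
# Prints latest - earliest per partition, i.e. the number of retained messages,
# assuming contiguous offsets (no compaction, no transaction markers).
messages_per_partition() {
  awk -F: '
    NR == FNR { latest[$2] = $3; next }   # first file: latest (log-end) offsets
    { earliest[$2] = $3 }                 # second file: earliest (log-start) offsets
    END { for (p in latest) printf "partition %s: %d\n", p, latest[p] - earliest[p] }
  ' "$1" "$2" | sort -k2,2n
}

# Usage with process substitution (requires a running broker):
#   messages_per_partition \
#     <(./kafka-get-offsets.sh --bootstrap-server localhost:9092 --topic example-topic-with-3-partitions --time latest) \
#     <(./kafka-get-offsets.sh --bootstrap-server localhost:9092 --topic example-topic-with-3-partitions --time earliest)
```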

- Read messages starting from a manually specified Offset:

./kafka-console-consumer.sh --topic example-topic-with-3-partitions --bootstrap-server localhost:9092 --property print.key=true --property key.separator="-" --partition 2 --offset 5

- Task: Count how many messages you have read starting from the given Offset. Are Offsets numbered from 0?
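To cross-check the count: a consumer started with --offset N reads offsets N, N+1, ..., L-1, where L is the latest (log-end) offset of the partition. It should therefore print L - N messages. The Bash sketch below encodes that arithmetic. expected_from_offset is a hypothetical name. The sketch assumes N lies between the partition's earliest and latest offsets and that offsets are contiguous.

```shell
#!/usr/bin/env bash
# expected_from_offset LATEST_OFFSET START_OFFSET
# Number of messages a consumer started at START_OFFSET should read,
# given the partition's latest (log-end) offset. Assumes contiguous offsets.
expected_from_offset() {
  local latest=$1 start=$2
  if (( start > latest )); then
    echo "start offset $start is beyond the latest offset $latest" >&2
    return 1
  fi
  echo $(( latest - start ))
}
```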

- Clear the messages on the Topic example-topic-with-3-partitions without deleting the Topic itself, using the script:

./kafka-delete-records.sh

- Create a file containing the specification of the messages to delete:

delete-records.json - define in it the partitions and, for each one, the offset up to which messages should be deleted (all messages with a lower offset are removed)

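Replace each LATEST_OFFSET_OF_PARTITION_n placeholder with the latest offset of that partition, as read earlier with --time latest:

{

"partitions": [

{ "topic": "example-topic-with-3-partitions", "partition": 0, "offset": LATEST_OFFSET_OF_PARTITION_0 },

{ "topic": "example-topic-with-3-partitions", "partition": 1, "offset": LATEST_OFFSET_OF_PARTITION_1 },

{ "topic": "example-topic-with-3-partitions", "partition": 2, "offset": LATEST_OFFSET_OF_PARTITION_2 }

],

"version": 1

}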
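Instead of typing the offsets by hand, you can generate the JSON file from the --time latest output. The Bash sketch below turns each topic:partition:offset line into one entry of the partitions array. offsets_to_delete_json is a hypothetical name. Records below the given offset are deleted, so using the latest offset should remove everything currently stored in that partition.

```shell
#!/usr/bin/env bash
# Build a delete-records.json body from `kafka-get-offsets.sh ... --time latest`
# output read on stdin ("topic:partition:offset" per line).
offsets_to_delete_json() {
  awk -F: '
    BEGIN { printf "{\n  \"partitions\": [" }
    NF == 3 {
      # Prefix every entry except the first with a comma to keep the JSON valid.
      printf "%s\n    { \"topic\": \"%s\", \"partition\": %d, \"offset\": %d }", (n++ ? "," : ""), $1, $2, $3
    }
    END { printf "\n  ],\n  \"version\": 1\n}\n" }
  '
}

# Usage (requires a running broker):
#   ./kafka-get-offsets.sh --bootstrap-server localhost:9092 \
#     --topic example-topic-with-3-partitions --time latest | offsets_to_delete_json > delete-records.json
```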

- Run the script:

./kafka-delete-records.sh --bootstrap-server localhost:9092 --offset-json-file ./delete-records.json


- Check if the previously published messages have been deleted from Kafka:

./kafka-console-consumer.sh --topic example-topic-with-3-partitions --bootstrap-server localhost:9092 --from-beginning --property print.key=true --property key.separator="-"