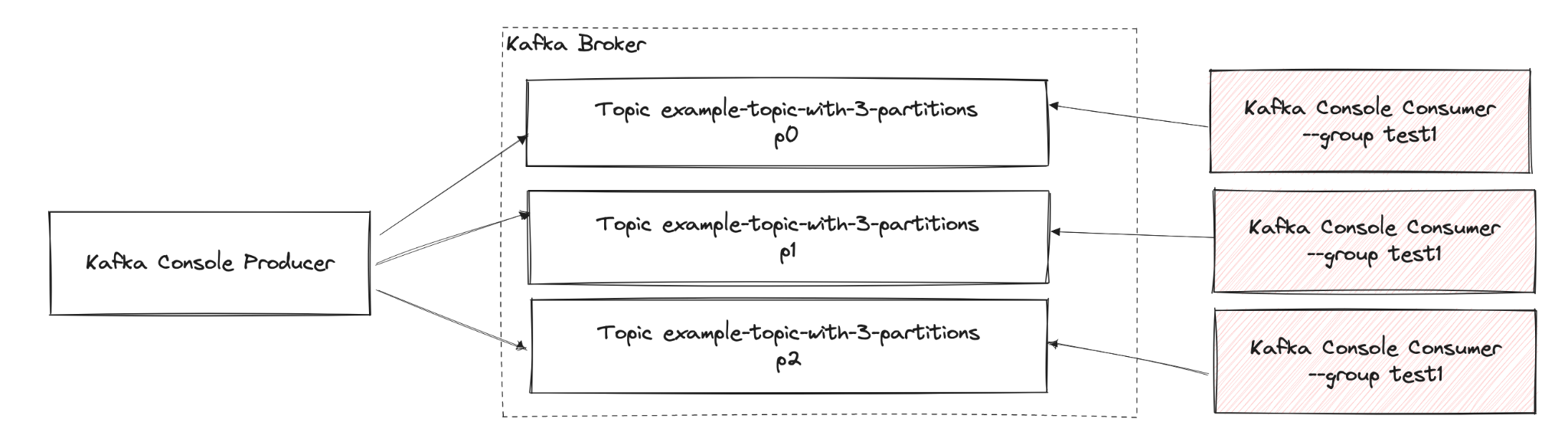

Multiple partitions

In this exercise, you will explore the fundamental concepts of Kafka Topic Partitions, Consumer Group Offsets, and message production and consumption. You will implement a custom Kafka producer and consumer, gaining hands-on experience in managing data flows and consumer groups when a topic has multiple partitions. Following a structured series of steps, you will create a topic with multiple partitions and implement the code that produces and consumes messages across these partitions. This task will deepen your understanding of distributed messaging systems, strengthen your Kafka skills, and demonstrate the benefits of partitioning for scalability and performance.

Implementation

- Clone repository https://github.com/pszymczyk/kafka-native-java-playground

- Checkout step2 branch

- In the PublishRunner class, at line 35, create a ProducerRecord with a random integer as the message key and the string My favourite number is ${randomNumber} as the value

- Go to the SubscribeRunner class

- Implement the main(...) method so that it launches 3 ConsumerLoop threads; each consumer should work in a group named step2

- Create 3 ConsumerLoop class objects - consumer0, consumer1, consumer2

- Use the constructor public ConsumerLoop(int id, String groupId, String topic)

- Create 3 Thread objects - consumer0Thread, consumer1Thread, consumer2Thread - and run the consumer loops created above on them

- Open terminal, create Topic step2 with 10 partitions

- ./kafka-topics.sh --create --topic step2 --bootstrap-server localhost:9092 --replication-factor 1 --partitions 10

- Open IDE, run SubscribeRunner main(...)

- Open terminal, display all Consumer Groups

- ./kafka-consumer-groups.sh --list --bootstrap-server localhost:9092

- The list should include the Consumer Group defined in the SubscribeRunner file - step2

- Display the details of the Consumer Group

- ./kafka-consumer-groups.sh --describe --group step2 --bootstrap-server localhost:9092

- The response shows the current assignment of Consumers to Partitions and the Offsets for this Consumer Group

- Open IDE, run PublishRunner main(...) method to publish some messages

- Sent messages should appear in the application logs of both PublishRunner and SubscribeRunner

- Once again, display the details of the Consumer Group; this time the Offsets on the Partitions should have changed

- Stop the SubscribeRunner application

- Wait a moment

- Once again, display the details of the Consumer Group; this time it should show the Lag that has built up on the Partitions

- Run the SubscribeRunner application again

- Once again, read the Offsets of the Consumer Group step2; the Lag should decrease to 0

- Stop the SubscribeRunner application

- Manually reset the Consumer Group's Offset to the earliest Offset available on the Topic

- ./kafka-consumer-groups.sh --bootstrap-server localhost:9092 --group step2 --reset-offsets --to-earliest --topic step2 --execute

- Once again, read the Offsets of the Consumer Group step2; the LAG column should show values greater than zero
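The PublishRunner step near the top of the list asks for a random integer key and a templated value. A minimal sketch of how those two pieces could be built is shown below. It uses only the Java standard library so that it runs without the Kafka client; the class name `PublishRunnerSketch` and the helper `buildValue` are hypothetical, and treating the key as a String is an assumption, since the key type depends on the serializer configured in the repository.

```java
import java.util.concurrent.ThreadLocalRandom;

class PublishRunnerSketch {

    // Hypothetical helper: fills in the ${randomNumber} placeholder from the exercise text.
    static String buildValue(int randomNumber) {
        return "My favourite number is " + randomNumber;
    }

    public static void main(String[] args) {
        int randomNumber = ThreadLocalRandom.current().nextInt();

        // Assumption: the producer uses String keys. With an Integer key serializer,
        // the int itself would be passed as the key instead.
        String key = String.valueOf(randomNumber);
        String value = buildValue(randomNumber);

        // With kafka-clients on the classpath, the record would be created roughly as:
        //   new ProducerRecord<>("step2", key, value)
        System.out.println(key + " -> " + value);
    }
}
```

Because the key is random, messages are spread across the partitions of the topic rather than all landing on one.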
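The SubscribeRunner steps (three ConsumerLoop objects, three threads, one group named step2) can be sketched as follows. The nested `ConsumerLoop` here is a hypothetical stand-in that only prints its settings, so the example compiles without the Kafka client library; the real class in the repository is assumed to implement Runnable and to poll Kafka in its run() method. The helper `buildConsumerThreads` is likewise invented for illustration.

```java
import java.util.ArrayList;
import java.util.List;

class SubscribeRunnerSketch {

    // Hypothetical stand-in for the repository's ConsumerLoop (assumed to be a Runnable).
    static class ConsumerLoop implements Runnable {
        private final int id;
        private final String groupId;
        private final String topic;

        ConsumerLoop(int id, String groupId, String topic) {
            this.id = id;
            this.groupId = groupId;
            this.topic = topic;
        }

        @Override
        public void run() {
            // The real class would subscribe and poll here; the stand-in just reports itself.
            System.out.println("consumer" + id + " running in group " + groupId + " on topic " + topic);
        }
    }

    // Builds one thread per consumer; every consumer shares the same group id.
    static List<Thread> buildConsumerThreads(int count, String groupId, String topic) {
        List<Thread> threads = new ArrayList<>();
        for (int id = 0; id < count; id++) {
            ConsumerLoop consumer = new ConsumerLoop(id, groupId, topic);
            threads.add(new Thread(consumer, "consumer" + id + "Thread"));
        }
        return threads;
    }

    public static void main(String[] args) {
        // Topic and group are both named step2 in this exercise.
        for (Thread thread : buildConsumerThreads(3, "step2", "step2")) {
            thread.start();
        }
    }
}
```

Because all three consumers share one group, Kafka divides the 10 partitions among them, so each consumer is typically assigned three or four partitions in the --describe output.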
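The LAG column in the --describe output is, for each partition, the difference between LOG-END-OFFSET and CURRENT-OFFSET. The sketch below works through that arithmetic for a single partition. The class name `LagSketch` and the numbers are hypothetical, and it assumes the earliest offset on the partition is 0 (nothing has been removed by retention).

```java
class LagSketch {

    // Lag for one partition: messages in the log that the group has not yet committed.
    static long lag(long currentOffset, long logEndOffset) {
        return logEndOffset - currentOffset;
    }

    public static void main(String[] args) {
        // Hypothetical partition holding 7 messages.
        long logEndOffset = 7;

        // Consumer group has read everything: committed offset equals the log end.
        System.out.println("caught up: " + lag(7, logEndOffset));   // prints "caught up: 0"

        // After --reset-offsets --to-earliest the committed offset goes back to 0.
        System.out.println("after reset: " + lag(0, logEndOffset)); // prints "after reset: 7"
    }
}
```

This is why resetting to the earliest offset makes the lag jump back up: the log end does not move, only the group's committed position does.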